The Intelligent Control Systems (ICS) group aims to develop machine learning and decision algorithms for machines in the physical world. Our research often starts with fundamental theoretical questions on learning and control, leading us to develop new methods and algorithms, which we finally implement and demonstrate on physical machines such as robots, vehicles, and other autonomous systems.

When learning on physical machines, special challenges arise that differ from those in other machine learning domains, which typically involve pure software or computer systems. For example, learning in the real world often has to cope with imperfect and relatively small data sets, because physical systems cannot be sampled arbitrarily and exhibit high-dimensional, continuous state-action spaces. A constant stream of data (e.g., from sensors) requires online and lifelong learning, but often on embedded hardware with limited computational resources. Finally, theoretical guarantees on safety, robustness, and reliability are essential for physical learning systems, but are often not available in standard machine learning. These are some of the fundamental challenges that arise when artificial intelligence meets the physical world – and that drive our research.

In addition to learning, control, and decision making for a single physical system, we are also interested in distributed and networked problems, for example, where multiple intelligent agents cooperate to achieve a common goal. How can a team of robots efficiently coordinate their actions? What information should they exchange, and when? And how should such systems be designed for limited embedded resources such as bandwidth, computation, or energy? These are some of the questions that we address in this research direction.

As we seek to bridge computational and physical intelligence, research at ICS is highly interdisciplinary. In particular, we work at the intersection of machine learning, systems & control theory, applied mathematics, and robotics. The main research directions at ICS can be summarized as:

- Learning-based control: machine learning and control theory for learning on physical systems with guarantees;

- Distributed intelligence: learning, control, and cooperation across multi-agent and cyber-physical networks;

- Resource efficiency: achieving high-performance control and learning with limited resources (embedded computation, small data, communication bandwidth, energy).

The ICS group was founded in February 2018, when Sebastian Trimpe was appointed as a Max Planck Research Group Leader (W2) at MPI-IS Stuttgart. The Max Planck Research Group is primarily funded through the Cyber Valley initiative. After its start, the ICS group grew rapidly to around eight PhD and postdoctoral researchers, as well as many students and interns. In May 2020, Sebastian Trimpe accepted a full professorship at RWTH Aachen University, where he has since been building up a new Institute for Data Science in Mechanical Engineering. ICS has been continued for a transition period with an agreed end date of April 2022. With several researchers transitioning from Stuttgart to Aachen, the ICS group formed the foundation for an entirely new institute at RWTH. We are grateful for the opportunities that we have had at MPI-IS. The ecosystem allowed us to grow quickly, form a strong team, and have an impact in several new research directions at the intersection of Machine Learning and Automatic Control.

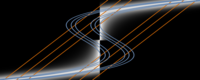

Adaptive Locomotion of Soft Microrobots

Soft robots are a class of robots constructed from highly deformable materials, inspired by the bodies of living organisms. In \cite{2016palagi2}, Palagi et al. introduced soft microrobots based on stimuli-responsive polymers. Projecting a light field onto the surface heats part of the microrobot's body, therefore creating a...

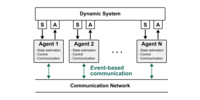

Networked Control and Communication

Future intelligent systems such as autonomous robots, self-driving cars, or manufacturing systems will be connected over communication networks. Facilitated by the network, the individual agents can coordinate their actions and thus achieve functionality exceeding the individual unit (for example, driving in formation or collaborati...

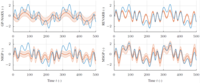

Controller Learning using Bayesian Optimization

Autonomous systems such as humanoid robots are characterized by a multitude of feedback control loops operating at different hierarchical levels and time-scales. Designing and tuning these controllers typically requires significant manual modeling and design effort and exhaustive experimental testing. For managing the ever greater c...

Event-based Wireless Control of Cyber-physical Systems

Cyber-physical systems (CPS) tightly integrate physical processes with computing and communication, thus enabling emerging applications such as coordinated flight of autonomous vehicles or controlling factory automation machinery over wireless networks. The adoption of wireless technology offers unprecedented flexibility in sharing...

Model-based Reinforcement Learning for PID Control

Proportional-Integral-Derivative (PID) control architectures cover a significant portion of today’s industrial control applications. The PID control law for a Single-Input Single-Output (SISO) system is given by ...
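As a minimal sketch of the textbook PID law, $u(t) = K_p e(t) + K_i \int_0^t e(\tau)\,d\tau + K_d \, \dot{e}(t)$ with error $e = r - y$, the following discrete-time controller may help make the idea concrete. It is an illustration under stated assumptions, not the parameterization or code used in the project: the class name `PID`, the gain names `kp`, `ki`, `kd`, the sample time `dt`, and the first-order test plant in `simulate` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook discrete-time PID controller (illustrative assumption only)."""
    kp: float  # proportional gain
    ki: float  # integral gain
    kd: float  # derivative gain
    dt: float  # sample time in seconds
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, reference: float, measurement: float) -> float:
        """Return the control input u for one sample."""
        error = reference - measurement
        # Rectangular (forward) approximation of the error integral.
        self.integral += error * self.dt
        # Backward difference of the error; the first call assumes
        # a previous error of zero, so kd > 0 causes an initial kick.
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(pid: PID, steps: int = 2000, reference: float = 1.0) -> float:
    """Run the controller on a hypothetical plant x' = -x + u (forward Euler)."""
    x = 0.0
    for _ in range(steps):
        u = pid.step(reference, x)
        x += pid.dt * (-x + u)
    return x


if __name__ == "__main__":
    final_state = simulate(PID(kp=2.0, ki=1.0, kd=0.0, dt=0.01))
    print(f"state after 20 s: {final_state:.4f}")
```

In this sketch the integral term is what removes the steady-state offset that a purely proportional controller would leave on the assumed plant; tuning the three gains by hand is the step that learning-based approaches aim to automate.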

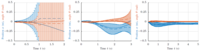

Learning Probabilistic Dynamics Models

In Reinforcement Learning (RL), an agent strives to learn a task solely by interacting with an unknown environment. Given the agent’s inputs to the environment and the observed outputs, model-based RL algorithms make efficient use of all available data by constructing a model of the un...

Gaussian Filtering as Variational Inference

Decision making requires knowledge of some variables of interest. In the vast majority of real-world problems, these variables are latent, i.e., they cannot be observed directly and must be inferred from available measurements. To maintain an up-to-date distribution over the latent variables, past beliefs have to ...